“Emotional” algorithms for artworks

Recognizing images

A key problem in computer science is teaching computers to “understand” images. Traditionally, this means developing algorithms that can, given an image, detect whether it contains attributes such as faces or common objects such as chairs or airplanes.

Early work tried to address this problem with complex hand-crafted rules. More modern systems rely on machine learning techniques that take a large amount of annotated data and try to learn the rules from it. Once trained, the algorithm can analyze new images that have never been annotated.

From recognition to emotion

The datasets used to "train" existing learning algorithms, however, contain only "objective" or fact-based information about image content. In contrast, human perception is much more complex and also has an "emotional" or affective component: certain images make us happy, while others can make us sad or even angry. It is impossible to capture the effect that Picasso’s Guernica might have on someone, for example, simply by listing all of the objects that it depicts.

It is precisely this challenge that Panos Achlioptas, a PhD student at Stanford University, Leonidas Guibas, his thesis advisor, and Maks Ovsjanikov, a professor at the Computer Science Laboratory of École Polytechnique (LIX*) who also completed his thesis under Leonidas Guibas's supervision, set out to meet. The trio was joined by Mohamed Elhoseiny and Kilichbek Haydarov, both researchers at the King Abdullah University of Science and Technology (KAUST) in Saudi Arabia, for their expertise in the analysis of artworks.

"In our work, we set out to create a new dataset and associated machine learning models that embrace the subjectivity and emotional effect of perception," explains Maks Ovsjanikov, who conducted this work as part of his EXPROTEA project, funded by an ERC Starting Grant.

Thousands of comments to learn from

"All members of the group have strong experience in machine learning, but this is the first project of this kind for us. We focused on artwork because it is often created explicitly to elicit a strong emotional response," says the researcher.

To build the dataset from which the algorithms would learn, they used more than 80,000 works of art that people annotated online, recording both the emotion they felt on seeing the work and a brief explanation of why.

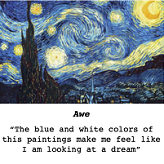

The result is an entirely new dataset, which they called ArtEmis, containing over 439,000 incredibly creative and diverse explanations that link visual attributes to psychological states and include imaginative or metaphorical descriptions of objects that might not even directly appear in the image (e.g., “it reminds me of my grandmother”).
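To make the shape of such annotations concrete, here is a minimal Python sketch. It assumes a hypothetical record layout (an artwork identifier, a reported emotion, and a free-text explanation) and is not the actual ArtEmis schema; the field names, emotion labels, and toy records are invented for illustration. Because several people can react differently to the same work, the sketch keeps a distribution of emotions per artwork rather than a single label.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """One annotator's response to one artwork (hypothetical schema)."""
    artwork_id: str   # illustrative identifier, not a real dataset key
    emotion: str      # the emotion the annotator reported
    explanation: str  # short free-text reason for that emotion


def emotion_distribution(annotations):
    """Return, per artwork, the share of annotators reporting each emotion."""
    counts = defaultdict(Counter)
    for ann in annotations:
        counts[ann.artwork_id][ann.emotion] += 1
    return {
        artwork: {emotion: n / sum(c.values()) for emotion, n in c.items()}
        for artwork, c in counts.items()
    }


# Toy records invented for illustration only; they are not dataset entries.
toy = [
    Annotation("painting-a", "sadness", "it reminds me of my grandmother"),
    Annotation("painting-a", "sadness", "the colors feel heavy"),
    Annotation("painting-a", "awe", "the scale of the scene is striking"),
    Annotation("painting-b", "contentment", "the light looks warm and calm"),
]

dist = emotion_distribution(toy)
```

In this toy example, two of the three annotators of the first painting report the same emotion and the third reports a different one, so the disagreement is preserved instead of being collapsed into one "correct" answer.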

Using this new dataset, the researchers developed novel deep learning methods that can produce plausible "emotional" explanations for new images not found in the initial dataset.

The annotations produced by these new systems are richer than those produced by standard computer systems and sometimes look remarkably human (for example, "this painting makes me feel like I am looking at a dream").

"While we are still far from modeling human perception, our hope is that these systems will help to shed light on the emotional effect of images and ultimately even contribute to the creation of novel artworks," says Maks Ovsjanikov.

*LIX: a joint research unit of CNRS and École Polytechnique - Institut Polytechnique de Paris